Convolutional Neural Networks: Object Detection

Founder & CEO at Azoft

Reading time: 19 min

These days there are so many photo and video surveillance systems in public areas that you would be hard pressed to find someone who hasn’t heard about them. “Big Brother” is watching us: on the roads, in airports, at train stations, when we shop in supermarkets or walk through the underground. Remote monitoring technologies and photo and video capture programs are widespread in everyday life, but they also serve military and other purposes.

The captured visual data arrives in large volumes, and automating its processing requires constant support and the development of new methods. In particular, digital image processing often focuses on object detection, localization and pattern recognition.

The Azoft R&D team has extensive experience with similar challenges. In particular, we have implemented a face recognition system and worked on a project for road sign recognition. We have also implemented a fully convolutional neural network for semantic segmentation. Today we are going to share our experience of detecting objects in images with convolutional neural networks, a type of neural network that has proven itself in our past projects.

Project Overview

In the past, the Haar cascade classifier and the LBP-based classifier were the best tools for detecting objects in images. Artificial neural networks and recurrent neural networks handled the task reasonably well but came with many challenges and problems. With the introduction of convolutional neural networks and their successful application in computer vision, cascade classifiers have become the second-best alternative in the object detection field.

We decided to test the practical effectiveness of convolutional neural networks for object detection in images, choosing car license plates and road signs as the objects of our research.

The goal of our project was to develop several CNN models that recognize objects in images with higher quality and performance than cascade classifiers. We took two different approaches: a fully convolutional network (one built only from convolutional layers, with no fully connected layers) and a network that is not fully convolutional.

The task is broken into four stages:

- License plate keypoint detection with a CNN

- Road sign keypoint detection with a CNN

- Road sign detection using a fully convolutional neural network

- Comparing the performance of cascade classifiers and a CNN in license plate detection

The first three stages ran in parallel, with one engineer assigned to each. At the end, we compared the final CNN model from the first stage with cascade classifier methods using a set of chosen criteria.

The performance of recognition algorithms is particularly important on modern mobile devices. This is why we tested the neural networks and cascade classifiers on smartphones.

Implementation

Stage 1. Training neural nets to recognize key points on license plates

Over the years, we have used machine learning in several research projects, and for image recognition we have often used training datasets of license plate numbers to “feed” learning algorithms. Since our new experiment required detecting specific objects of the same kind in images, our license plate database suited it perfectly.

First, we decided to teach a convolutional neural network to find the keypoints of license plates. This is a regression problem: the pixels of the image are the independent input variables, and the coordinates of the object’s keypoints are the dependent output variables. You can see an example of keypoint detection in the image below.
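To make the regression framing concrete, here is a minimal NumPy sketch of the targets and the loss. It assumes four keypoints per plate (the corners) and an L2 loss of the form computed by Caffe’s `EuclideanLoss` layer, 1/(2N) Σ‖ŷ − y‖²; the keypoint count and the helper names are illustrative assumptions, not details of the project’s network.

```python
import numpy as np

# Assumption for illustration: 4 keypoints per plate (its corners), each an (x, y) pair,
# so the network regresses 8 numbers per image.
NUM_KEYPOINTS = 4


def flatten_keypoints(points):
    """Turn a list of (x, y) pairs into the target vector [x0, y0, x1, y1, ...]."""
    arr = np.asarray(points, dtype=np.float32)
    if arr.shape != (NUM_KEYPOINTS, 2):
        raise ValueError("every image needs the same keypoints, listed in the same order")
    return arr.reshape(-1)


def euclidean_loss(pred, target):
    """L2 regression loss averaged over the batch: 1/(2N) * sum_n ||pred_n - target_n||^2."""
    pred = np.asarray(pred, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    return float(np.sum((pred - target) ** 2) / (2 * pred.shape[0]))


# Usage: a batch of 2 images with normalized corner coordinates.
targets = np.stack([
    flatten_keypoints([(-0.5, -0.2), (0.5, -0.2), (0.5, 0.2), (-0.5, 0.2)]),
    flatten_keypoints([(-0.3, -0.1), (0.3, -0.1), (0.3, 0.1), (-0.3, 0.1)]),
])
predictions = targets + 0.1  # every predicted coordinate is off by 0.1
print(euclidean_loss(predictions, targets))
```

The fixed keypoint order matters because the loss compares outputs position by position: a corner listed in the wrong slot is penalized even if it is located correctly.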

Training a convolutional neural network to find keypoints requires a dataset with many images of the target object (at least 1,000). The keypoint coordinates have to be labeled in every image and listed in the same order.

Our dataset included several hundred images, which was not enough to train the network. We therefore decided to enlarge it by augmenting the available data. Before augmentation, we labeled the keypoints in the images and split the dataset into training and control (validation) parts.

We applied the following transformations to the initial images during augmentation:

- Shifts

- Resizing

- Rotations about the center

- Mirroring

- Affine transformations (which allowed us to rotate and stretch a picture)
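Whatever geometric transformation is applied to an image, the same transformation has to be applied to its keypoints, and mirroring additionally swaps which corner is which, so the labels must be reordered to keep the fixed order. Below is a minimal NumPy sketch of the keypoint side, assuming four corners labeled top-left, top-right, bottom-right, bottom-left; the helper names are hypothetical. The image itself could be warped with the same 2×3 matrix, for example via OpenCV’s `cv2.warpAffine(image, matrix, (width, height))`.

```python
import numpy as np


def affine_matrix(center, angle_deg=0.0, scale=1.0, shift=(0.0, 0.0)):
    """2x3 matrix: rotate by angle_deg and scale about `center`, then translate by `shift`."""
    cx, cy = center
    a = np.deg2rad(angle_deg)
    cos_a, sin_a = scale * np.cos(a), scale * np.sin(a)
    rot = np.array([[cos_a, -sin_a], [sin_a, cos_a]])
    # p' = R (p - c) + c + shift  ==  R p + (c - R c + shift)
    offset = np.array([cx, cy]) - rot @ np.array([cx, cy]) + np.asarray(shift, dtype=float)
    return np.hstack([rot, offset[:, None]])


def transform_keypoints(points, matrix):
    """Apply a 2x3 affine matrix to a (K, 2) array of (x, y) keypoints."""
    pts = np.asarray(points, dtype=float)
    homogeneous = np.hstack([pts, np.ones((len(pts), 1))])
    return homogeneous @ matrix.T


# Corner order assumed here: top-left, top-right, bottom-right, bottom-left.
# After a horizontal flip, old TR becomes new TL, old TL becomes new TR, etc.
MIRROR_ORDER = [1, 0, 3, 2]


def mirror_keypoints(points, width, order=MIRROR_ORDER):
    """Horizontal flip in pixel-index coordinates, then reorder so labels keep their meaning."""
    pts = np.asarray(points, dtype=float).copy()
    pts[:, 0] = (width - 1) - pts[:, 0]
    return pts[order]


corners = [(10, 20), (50, 20), (50, 40), (10, 40)]
print(mirror_keypoints(corners, width=320).tolist())
print(transform_keypoints(corners, affine_matrix((160, 120), angle_deg=5, scale=1.1)).round(1))
```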

In addition, we resized the images to 320×240 pixels. You can see an example of augmentation with these transformations in the image below.

We chose the Caffe framework for the first stage because it is one of the most flexible and fastest frameworks for experiments with convolutional neural networks.

One way to solve a regression task in Caffe is to supply the data in the HDF5 file format. After normalizing pixel values to the range 0 to 1 and coordinate values to the range -1 to 1, we packed the images and keypoint coordinates into HDF5. You may find more details in our tutorial (see below).
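The normalization and packing step might look like the following sketch. It assumes grayscale 320×240 images and uses `h5py` to write datasets named `data` and `label`, which is the usual pairing with a Caffe `HDF5Data` layer whose tops carry those names; that layer’s `source` parameter points to a text file listing HDF5 paths, one per line. The function names and file layout are assumptions, not the project’s actual scripts.

```python
import numpy as np


def normalize_batch(images, keypoints, width=320, height=240):
    """Scale pixels to [0, 1] and keypoint coordinates to [-1, 1].

    images: uint8 array (N, H, W) of grayscale images (assumed input format).
    keypoints: array (N, K, 2) of (x, y) pixel coordinates in a fixed order.
    """
    data = images.astype(np.float32) / 255.0
    data = data[:, None, :, :]  # Caffe blobs are N x C x H x W
    kp = np.asarray(keypoints, dtype=np.float32).copy()
    kp[..., 0] = kp[..., 0] / width * 2.0 - 1.0
    kp[..., 1] = kp[..., 1] / height * 2.0 - 1.0
    labels = kp.reshape(len(kp), -1)  # N x 2K regression targets
    return data, labels


def write_caffe_hdf5(path, data, labels, list_file):
    """Write 'data' and 'label' datasets and append the file to the HDF5Data source list."""
    import h5py  # imported here so normalize_batch works without h5py installed

    with h5py.File(path, "w") as f:
        f.create_dataset("data", data=data)
        f.create_dataset("label", data=labels)
    with open(list_file, "a") as lst:
        lst.write(path + "\n")
```

Usage would be `data, labels = normalize_batch(images, keypoints)` followed by `write_caffe_hdf5("train_0.h5", data, labels, "train_h5_list.txt")`, where both file names are placeholders.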

At first, we tried several large network architectures (4 to 6 convolutional layers with a large number of convolution kernels). These models achieved good accuracy but ran very slowly. For this reason, we designed a simpler architecture that kept the same quality.

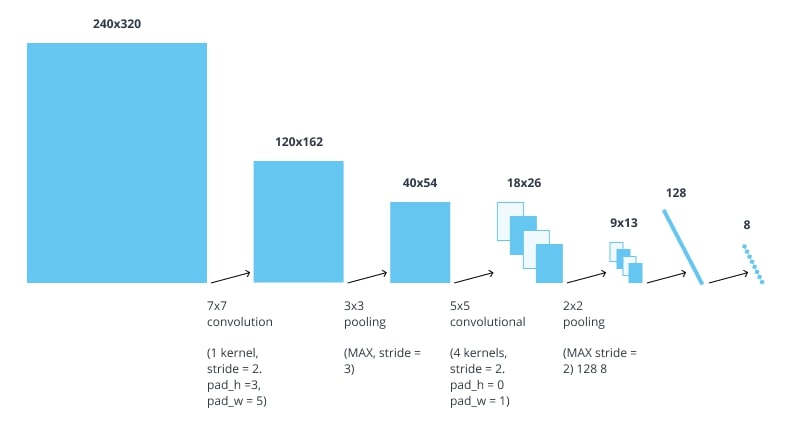

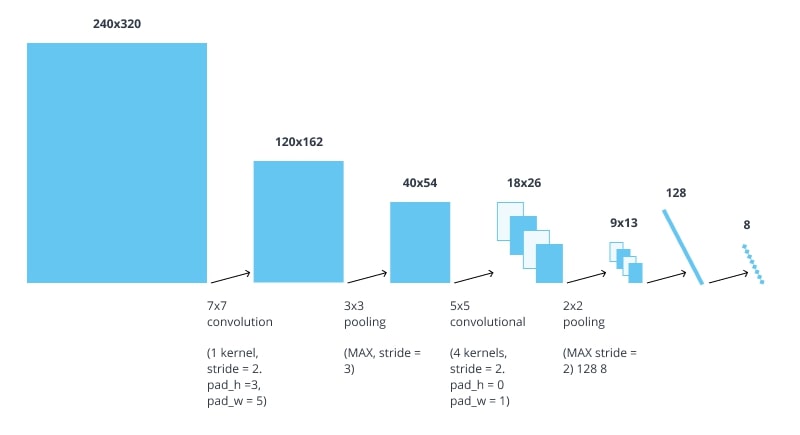

The final convolutional network structure for license plate keypoint detection was:

We trained the neural network with the Adam optimization method. Nesterov’s accelerated gradient descent still left a high loss even after careful tuning of the momentum and learning rate, whereas Adam showed better results: with it, the convolutional neural network trained faster and to higher quality.
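For reference, the two update rules can be sketched in NumPy. This is a generic illustration on a toy one-dimensional quadratic, not the project’s solver configuration (in Caffe both are selectable as solver types, `"Adam"` and `"Nesterov"`); the learning rates and step counts are values chosen for the example, and β1 = 0.9, β2 = 0.999 are the commonly used Adam defaults. The key design difference is that Adam rescales each parameter’s step by a running estimate of the gradient magnitude, while Nesterov momentum relies on a single global learning rate, which can make it more sensitive to the choice of learning rate and momentum.

```python
import numpy as np


def adam(grad_fn, x0, lr=0.05, beta1=0.9, beta2=0.999, eps=1e-8, steps=2000):
    """Adam: per-parameter step sizes from running first/second moment estimates."""
    x = np.asarray(x0, dtype=float).copy()
    m = np.zeros_like(x)  # running mean of gradients
    v = np.zeros_like(x)  # running mean of squared gradients
    for t in range(1, steps + 1):
        g = grad_fn(x)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)  # bias correction for the zero initialization
        v_hat = v / (1 - beta2 ** t)
        x = x - lr * m_hat / (np.sqrt(v_hat) + eps)
    return x


def nesterov(grad_fn, x0, lr=0.01, momentum=0.9, steps=2000):
    """Nesterov momentum: the gradient is evaluated at the look-ahead point x + momentum * vel."""
    x = np.asarray(x0, dtype=float).copy()
    vel = np.zeros_like(x)
    for _ in range(steps):
        g = grad_fn(x + momentum * vel)
        vel = momentum * vel - lr * g
        x = x + vel
    return x


def grad(x):
    # Gradient of the toy loss f(x) = (x - 3)^2, whose minimum is at x = 3.
    return 2.0 * (x - 3.0)


print(adam(grad, [0.0]), nesterov(grad, [0.0]))
```

Thanks to bias correction, Adam’s very first step has a magnitude of almost exactly `lr`, regardless of how large the gradient is.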

The resulting neural network finds the keypoints of license plates quite well, as long as the plates are not very close to the image borders. The results are shown in the images below:

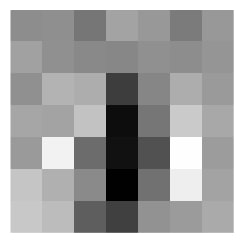

To better understand how a CNN works, we studied the learned convolution kernels and the feature maps at different layers of the network. The kernels of the final model showed that the network had learned to respond to sharp changes in brightness, which appear at the borders and characters of a license plate. The feature maps of the resulting model are shown in the images below.
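The kind of response described here can be reproduced with a hand-made kernel. In this NumPy sketch, a Sobel-like vertical-edge kernel stands in for a learned first-layer kernel (it is not one of the project’s trained kernels), and the toy image is a dark-to-bright step like the edge of a plate. CNN convolution layers, Caffe’s included, actually compute cross-correlation (no kernel flip), which is what `conv2d_valid` implements.

```python
import numpy as np


def conv2d_valid(image, kernel):
    """'Valid' 2-D cross-correlation, the operation a CNN convolution layer computes."""
    kh, kw = kernel.shape
    h, w = image.shape
    out = np.empty((h - kh + 1, w - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


# Hand-made vertical-edge kernel, standing in for a learned first-layer kernel.
edge_kernel = np.array([[-1.0, 0.0, 1.0],
                        [-2.0, 0.0, 2.0],
                        [-1.0, 0.0, 1.0]])

# Toy "plate border": dark left half, bright right half.
img = np.zeros((5, 8))
img[:, 4:] = 1.0

feature_map = np.maximum(conv2d_valid(img, edge_kernel), 0.0)  # ReLU activation
print(feature_map[0])
```

The feature map is zero over flat regions and peaks only where the brightness jumps, which is the behavior visible in the first-layer maps of the trained network.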

The trained kernels of the first and second layers are shown in the following images.

To examine the feature maps of the final model, we took a car picture (Image 1), converted it to grayscale and looked at the maps obtained after the first (Image 2) and second (Image 3) convolutional layers.

Image 1 – The unedited car picture

Image 2 – The feature map after the first convolutional layer

Image 3 – The feature map after the second convolutional layer

Finally, we marked the predicted keypoint coordinates on the image and successfully obtained the desired result:

The convolutional neural network proved very effective at detecting license plate keypoints: in the majority of cases, the keypoints of the plate borders were marked correctly, so we rate its performance highly. An example of license plate detection on an iPhone is available in the video.
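Because the network predicts normalized coordinates, its output has to be mapped back to pixels before the keypoints can be drawn. A minimal sketch, assuming the outputs are in [-1, 1] relative to a 320×240 image as in the normalization step; the function name is hypothetical.

```python
import numpy as np


def denormalize_keypoints(pred, width=320, height=240):
    """Map a flat output [x0, y0, x1, y1, ...] in [-1, 1] back to pixel (x, y) pairs."""
    pts = np.asarray(pred, dtype=float).reshape(-1, 2)
    xs = (pts[:, 0] + 1.0) / 2.0 * width   # -1 -> left edge, +1 -> right edge
    ys = (pts[:, 1] + 1.0) / 2.0 * height  # -1 -> top edge, +1 -> bottom edge
    return np.stack([xs, ys], axis=1)


# Usage: one plate prediction, rounded to integer pixels for drawing.
corners = denormalize_keypoints([-0.5, -0.5, 0.5, -0.5, 0.5, 0.5, -0.5, 0.5])
print(corners.round().astype(int).tolist())
```

The resulting integer points could then be drawn as a closed polygon, for example with OpenCV’s `cv2.polylines`.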

Stage 2. CNN for road sign keypoint detection

While training the convolutional neural network to find license plates, we simultaneously trained a similar network to find speed limit signs. These experiments were also based on the Caffe framework. To train this model, we used a larger dataset of nearly 1,700 sign images, which we augmented to 35,000.

In this way, we trained a neural network to find road signs in 200×200-pixel images.

A problem appeared when we tried to detect road signs of different sizes in the image. We therefore ran experiments with simpler features: the network had to find a white circle against a dark background. We kept the image size of 200×200 pixels and let the circle size vary by up to a factor of 3. In other words, the maximum circle radius in our dataset was three times the minimum.
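A synthetic dataset of this kind is easy to generate. The sketch below draws one bright disc on a dark 200×200 background with its center as the regression target. The minimum radius of 10 pixels, the uniform sampling and the function name are our assumptions; `ratio` controls the size spread (3.0 for the experiment described here) and `intensity` covers the gray-circle variant.

```python
import numpy as np


def make_circle_sample(rng, size=200, r_min=10.0, ratio=3.0, intensity=1.0):
    """One synthetic sample: a bright disc on a dark background, plus its center as the target.

    The radius is drawn uniformly from [r_min, ratio * r_min] (r_min is a hypothetical value).
    """
    r = rng.uniform(r_min, ratio * r_min)
    cx, cy = rng.uniform(r, size - r, size=2)  # keep the whole disc inside the frame
    yy, xx = np.mgrid[0:size, 0:size]
    inside = (xx - cx) ** 2 + (yy - cy) ** 2 <= r ** 2
    image = np.where(inside, intensity, 0.0).astype(np.float32)
    # Target: center coordinates normalized to [-1, 1], like the other keypoint targets.
    target = np.array([cx / size * 2 - 1, cy / size * 2 - 1], dtype=np.float32)
    return image, target, r


rng = np.random.default_rng(0)
batch = [make_circle_sample(rng) for _ in range(4)]
print([round(r, 1) for _, _, r in batch])
```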

In the end, we achieved an acceptable outcome only when the circle radius varied by less than 10%. Experiments with gray circles (gray levels from 0.1 to 1) gave the same result. Thus, how to detect objects whose size varies remains an open question.

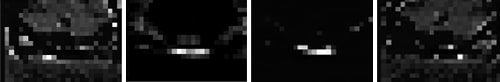

Examples from the last successfully tested model are shown in the group of pictures below. As we can see, the network learned to distinguish between similar signs. If the network pointed at the image center, it had not found an object.
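The “pointing at the center means no object” behavior can be expressed as a small decoding rule. This sketch assumes that signless training images were labeled with the image center and that outputs are normalized to [-1, 1], so the center is (0, 0); the `NO_OBJECT_RADIUS` threshold is a hypothetical value, not one from the project. One side effect of such a convention is that a real sign located exactly at the image center cannot be told apart from “no sign”.

```python
import numpy as np

# Hypothetical threshold: how close to the center (in normalized units) counts as "no sign".
NO_OBJECT_RADIUS = 0.05


def decode_prediction(pred, width=200, height=200, no_object_radius=NO_OBJECT_RADIUS):
    """Return the sign position in pixels, or None if the network pointed at the image center."""
    x, y = np.asarray(pred, dtype=float)[:2]
    if np.hypot(x, y) < no_object_radius:
        return None  # (0, 0) in [-1, 1] coordinates is the image center
    return ((x + 1) / 2 * width, (y + 1) / 2 * height)


print(decode_prediction([0.01, -0.02]), decode_prediction([0.5, -0.5]))
```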

For road sign detection, the convolutional neural network showed good results. However, variation in object size proved to be an unexpected obstacle. We plan to return to this problem in future projects, as it requires detailed research.

Stage 3. Fully convolutional neural network in road sign detection

Another model that we trained to find road signs was a deep fully convolutional network (one with multiple convolutional layers and no fully connected layers). This time, the network took input images of a fixed size and transformed them into smaller output images. In effect, the network is a nonlinear filter with resizing: it increases sharpness by removing noise from particular image areas without smearing edges, but the image size is reduced in the process.
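Max pooling, which this network used, is one source of that size reduction: each pooling step keeps only the largest value in every window. A minimal NumPy sketch, assuming 2×2 windows with stride 2 (the article does not state the pooling parameters).

```python
import numpy as np


def max_pool_2x2(feature_map):
    """2x2 max pooling with stride 2: keep the largest value in each non-overlapping window."""
    h, w = feature_map.shape
    h2, w2 = h // 2, w // 2  # an odd trailing row or column is dropped
    trimmed = feature_map[:h2 * 2, :w2 * 2]
    # Axis 1 indexes the row inside a window, axis 3 the column inside a window.
    return trimmed.reshape(h2, 2, w2, 2).max(axis=(1, 3))


x = np.arange(16, dtype=float).reshape(4, 4)
print(max_pool_2x2(x))
```

With these assumed parameters, each pooling layer halves the width and height, which is why object coordinates must be rescaled for the output.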

In the target output image, pixels that belong to the object have a brightness value of 1, and all pixels outside the object have a brightness value of 0. Therefore, the brightness of each pixel in the network’s output can be read as the probability that the pixel belongs to the object.

Note that the output image is smaller than the input image. That is why the object coordinates have to be scaled in proportion to the output image size.
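Building such a training target can be sketched as follows: the sign’s bounding box, given in input-image pixels, is scaled to the output resolution, and the mask is set to 1 inside it and 0 elsewhere. The box format `(x_min, y_min, x_max, y_max)` and the 200→50 pixel sizes in the example are assumptions for illustration.

```python
import numpy as np


def make_target_mask(box, in_size, out_size):
    """Binary target for a fully convolutional net whose output is smaller than its input.

    box: (x_min, y_min, x_max, y_max) of the object in input-image pixels.
    in_size, out_size: (width, height) of the input and output images.
    """
    sx = out_size[0] / in_size[0]
    sy = out_size[1] / in_size[1]
    x0, y0, x1, y1 = box
    # Scale the box into output coordinates, rounding outward so the object stays covered.
    c0, r0 = int(np.floor(x0 * sx)), int(np.floor(y0 * sy))
    c1, r1 = int(np.ceil(x1 * sx)), int(np.ceil(y1 * sy))
    mask = np.zeros((out_size[1], out_size[0]), dtype=np.float32)
    mask[r0:r1, c0:c1] = 1.0  # 1 inside the object, 0 everywhere else
    return mask


mask = make_target_mask((40, 40, 80, 80), in_size=(200, 200), out_size=(50, 50))
print(mask.shape, int(mask.sum()))
```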

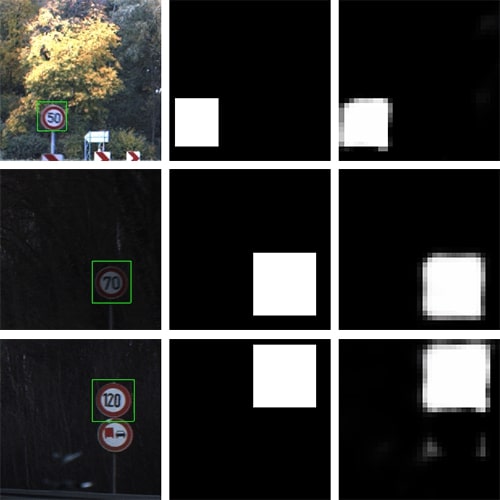

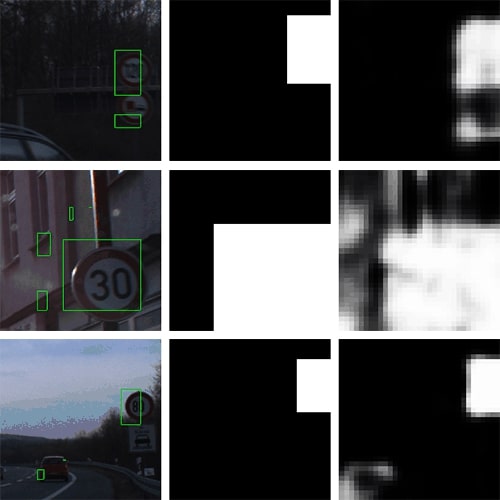

We chose the following architecture: 4 convolutional layers, with max pooling (downsampling by keeping the largest value in each window) after the first layer. We trained the network on a dataset of images of speed limit signs. After training, we applied the network to our dataset, binarized the output and found the connected components. Each component is a hypothesis about the sign location. Here are the results:

The results are shown below as 3 groups of 3 pictures. In each group, the left picture is the input, the right picture is the network output, and the central picture is the labeled target, the result we would ideally like to get.

We binarized the output images with a threshold of 0.5 and found the connected components. This gave us the rectangle that marks the location of a road sign; it is clearly visible in the left image, with its coordinates scaled back to the input image size.
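The post-processing step can be sketched as follows: threshold the probability map at 0.5, label connected components with a breadth-first flood fill, and scale each component’s bounding box back to the input size. This is a self-contained sketch using 4-connectivity; the function name and box format are hypothetical, and in practice a library routine such as `scipy.ndimage.label` can do the labeling.

```python
from collections import deque

import numpy as np


def find_sign_boxes(prob_map, in_size, threshold=0.5):
    """Binarize the network output and return one box per connected component.

    Boxes are (x_min, y_min, x_max, y_max), scaled back to in_size = (width, height).
    """
    mask = np.asarray(prob_map) > threshold
    h, w = mask.shape
    sx, sy = in_size[0] / w, in_size[1] / h
    seen = np.zeros_like(mask, dtype=bool)
    boxes = []
    for r in range(h):
        for c in range(w):
            if not mask[r, c] or seen[r, c]:
                continue
            # Breadth-first flood fill of one component (4-connectivity).
            queue = deque([(r, c)])
            seen[r, c] = True
            rows, cols = [r], [c]
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
                        rows.append(ny)
                        cols.append(nx)
            # Each component is one hypothesis about a sign's location.
            boxes.append((min(cols) * sx, min(rows) * sy,
                          (max(cols) + 1) * sx, (max(rows) + 1) * sy))
    return boxes


prob = np.zeros((50, 50))
prob[10:20, 10:20] = 0.9
prob[30:35, 40:45] = 0.7
print(find_sign_boxes(prob, in_size=(200, 200)))
```

Because every component becomes its own box, two signs in one image yield two rectangles, and an output with nothing above the threshold yields none.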

However, this method produced both good results and false positives:

Overall, we evaluate this model positively. The fully convolutional neural network correctly found about 80% of the signs in an independent test sample. A particular advantage of this model is that it can find two signs in the same image and mark each with a rectangle. If an image contains no sign, the network marks nothing.

Stage 4. License plate detection: Cascade classifiers vs CNN comparison

Our earlier experiments led us to conclude that CNNs are comparable to cascade classifiers and even outperform them in some cases.

To evaluate the quality and performance of the different methods for detecting objects in images, we used the following criteria:

Recall and precision in object recognition

Both the convolutional neural network and the Haar classifier demonstrate high precision and recall when detecting objects in images. The LBP classifier, by contrast, shows high recall (it finds the object quite reliably) but also a high rate of false positives, and therefore low precision.
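The two measures are defined from counts of true positives (TP), false positives (FP) and false negatives (FN). The counts in this sketch are hypothetical, chosen to mimic the LBP pattern of high recall with many false alarms; they are not measurements from our comparison.

```python
def precision_recall(true_positives, false_positives, false_negatives):
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    detected = true_positives + false_positives  # everything the detector reported
    actual = true_positives + false_negatives    # every object really present
    precision = true_positives / detected if detected else 0.0
    recall = true_positives / actual if actual else 0.0
    return precision, recall


# Hypothetical counts: most plates found, but with many false alarms.
p, r = precision_recall(true_positives=90, false_positives=60, false_negatives=10)
print(f"precision={p:.2f} recall={r:.2f}")
```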

Scale invariance

Whereas the Haar and LBP cascade classifiers are strongly invariant to changes in the scale of the object in the image, the convolutional neural network sometimes fails when the scale changes, and thus shows low scale invariance.

Number of attempts before getting a working model

With cascade classifiers, a few attempts are enough to get a working object detection model. Convolutional neural networks do not yield results as quickly: deep learning takes time, and reaching the goal may take dozens of experiments.

Time required for image processing

Neither a convolutional neural network nor the LBP classifier requires much time to process an image. The Haar classifier, however, takes significantly longer.

The average time spent processing one picture, in seconds (not counting the time for capturing and displaying video):

Robustness to tilted objects

Another great advantage of the convolutional neural network is its robustness to tilted objects: it can recognize an object in the image at almost any angle. Neither the Haar nor the LBP cascade classifier handles tilted objects well.

Final results

To summarize, convolutional neural networks are equal to or even better than cascade classifiers on some criteria. However, to achieve good results, neural networks must be trained many times, and the problem remains that the object scale cannot change much.

Which recognition system is better: Convolutional Neural Networks or Cascade Classifiers?

While solving the problem of specific object recognition in images, we applied two CNN models. The first convolutional network finds an object’s keypoints in images. The second one detects the objects themselves in images.

Each of the experiments was very labor-intensive and time-consuming. Once again we were convinced that training convolutional neural networks is complicated and requires more investment to obtain reliable results.

The comparative analysis of cascade classifier methods and the trained convolutional neural network model confirmed our main hypothesis: a convolutional neural network localizes objects faster and with higher quality than cascade classifiers, provided the object does not change much in scale. To solve the problem of low scale invariance, in future projects we will try increasing the number of convolutional layers and using more representative training datasets.
