Using Automated Call Scoring To Improve Call Center Performance

Founder & CEO at Azoft


Call scoring is what call center quality assurance reps do to help their coworkers reach the dream targets of a 90% customer satisfaction rate and a 75% first call resolution rate. Call scoring is a useful tool, but it is expensive and time-consuming. Without automation, it's just overpriced routine work that wears down your call center quality monitoring team.

We used a deep neural network to build an automated call scoring system that saves you money on call center quality assurance and spares your employees meaningless manual work.

What is automated call scoring?

An algorithm powered by a deep neural network processes all incoming calls and classifies each one as suspicious or neutral. Calls classified as suspicious go directly to the quality assurance team. Neutral calls with no violations are not reviewed by QA reps.

Say farewell to the monkey work you had to pay for.
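The routing step can be sketched roughly like this, assuming the network outputs a suspicion score between 0 and 1 for each call. `ScoredCall`, `route_calls`, and the 0.5 threshold are hypothetical names and values chosen for illustration, not the actual system described here.

```python
from dataclasses import dataclass

@dataclass
class ScoredCall:
    call_id: str
    suspicion_score: float  # hypothetical model output in [0, 1]

def route_calls(calls, threshold=0.5):
    """Split scored calls into a QA review queue and a neutral pile."""
    qa_queue, neutral = [], []
    for call in calls:
        # Calls at or above the threshold are treated as suspicious.
        if call.suspicion_score >= threshold:
            qa_queue.append(call)
        else:
            neutral.append(call)
    return qa_queue, neutral

calls = [ScoredCall("c1", 0.92), ScoredCall("c2", 0.10), ScoredCall("c3", 0.55)]
qa_queue, neutral = route_calls(calls)
print([c.call_id for c in qa_queue])  # ['c1', 'c3']
print([c.call_id for c in neutral])   # ['c2']
```

Lowering the threshold sends more calls to the QA team, trading extra review work for fewer missed violations.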

How exactly a deep neural network scores calls

With these search parameters set, the algorithm flags a call as suspicious if a call center rep swears, makes personal remarks, or raises their voice. All other calls are considered neutral and are not flagged.

Defining what counts as suspicious is up to you: scoring criteria can vary greatly across industries.
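One way to make the criteria configurable is sketched below. It assumes a hypothetical upstream detector that reports which events (swearing, raised voice, and so on) occurred in a call; the `Violation` enum, the `CRITERIA` profiles, and `is_suspicious` are illustrative names, not part of the system described here.

```python
from enum import Enum, auto

class Violation(Enum):
    """Events a hypothetical upstream detector might report for a call."""
    SWEARING = auto()
    PERSONAL_REMARKS = auto()
    RAISED_VOICE = auto()
    REFUSED_SUPPORT = auto()

# Hypothetical scoring profiles: which detected events make a call suspicious.
CRITERIA = {
    "default": {Violation.SWEARING, Violation.PERSONAL_REMARKS,
                Violation.RAISED_VOICE},
    "tech_support": {Violation.SWEARING, Violation.PERSONAL_REMARKS,
                     Violation.RAISED_VOICE, Violation.REFUSED_SUPPORT},
}

def is_suspicious(detected, profile="default"):
    """Flag a call if any detected event is enabled in the chosen profile."""
    enabled = CRITERIA.get(profile, CRITERIA["default"])
    return bool(set(detected) & enabled)

print(is_suspicious({Violation.REFUSED_SUPPORT}))                  # False
print(is_suspicious({Violation.REFUSED_SUPPORT}, "tech_support"))  # True
```

Keeping the criteria in data rather than in the model means a business can change what gets flagged without retraining, provided the detector already recognizes the events in question.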

How we trained a deep neural network

We used a sample of 1,700 audio files to train our neural network for automated call scoring. The source data was not labeled, so the network had no way of knowing which files were neutral and which were suspicious. That is why we labeled the samples manually, dividing them into neutral and suspicious.

In neutral files, call center reps:

- Don't raise their voice

- Provide clients with all the information they require

- Don't respond to clients' provocations

In suspicious files, reps most likely:

- Use explicit language

- Raise their voice or shout at clients

- Make personal remarks

- Refuse to provide support or advice

When the algorithm finished processing the files, it marked 200 of them as invalid: these recordings showed neither neutral nor suspicious characteristics. On closer inspection, these 200 turned out to be calls where:

- The client hung up right after a call center agent answered the call

- The client didn't say anything after dialing the number

- There was too much noise on either the call center side or the client side
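Recordings like these can often be caught before they reach the network. The sketch below is a hypothetical heuristic pre-filter, not the method used in this project (here the network itself flagged the files): it assumes mono audio as a list of floats in [-1, 1], and the `invalid_reason` function and all thresholds are illustrative.

```python
import math

def frame_rms(samples, frame_len):
    """RMS energy of consecutive, non-overlapping frames."""
    return [
        math.sqrt(sum(s * s for s in samples[i:i + frame_len]) / frame_len)
        for i in range(0, len(samples) - frame_len + 1, frame_len)
    ]

def invalid_reason(samples, sample_rate, min_seconds=3.0,
                   silence_rms=0.01, noise_floor_rms=0.1):
    """Return why a recording looks unusable, or None if it looks valid."""
    if len(samples) < min_seconds * sample_rate:
        return "too_short"   # e.g. the client hung up right away
    frames = frame_rms(samples, frame_len=max(1, sample_rate // 10))  # ~100 ms
    if max(frames) < silence_rms:
        return "silent"      # nobody said anything
    quietest = sorted(frames)[len(frames) // 10]  # ~10th-percentile frame energy
    if quietest > noise_floor_rms:
        return "noisy"       # even the pauses are loud
    return None
```

The noise check relies on the idea that normal speech contains pauses; if even the quietest tenth of the frames is loud, background noise is probably drowning out the conversation.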

After removing the invalid files, we divided the remaining 1,500 recordings into a training sample and a test sample, and used these two datasets to train and then test our deep neural network.
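A split like this can be sketched as below. The article does not say how the files were divided, so this shows one common option, a stratified split that keeps the neutral/suspicious ratio the same in both sets; `stratified_split` and its 0.2 default are illustrative assumptions. For reference, the test set described next held 205 of the 1,500 files, roughly 14%.

```python
import random
from collections import defaultdict

def stratified_split(items, labels, test_fraction=0.2, seed=42):
    """Split items into train/test lists while keeping label proportions."""
    by_label = defaultdict(list)
    for item, label in zip(items, labels):
        by_label[label].append(item)
    rng = random.Random(seed)  # fixed seed makes the split reproducible
    train, test = [], []
    for label, group in by_label.items():
        rng.shuffle(group)
        n_test = round(len(group) * test_fraction)
        test += [(item, label) for item in group[:n_test]]
        train += [(item, label) for item in group[n_test:]]
    return train, test
```

Stratifying matters most when one class is rare: with a plain random split, a small test set could end up with too few suspicious calls to evaluate the classifier meaningfully.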

The outcome

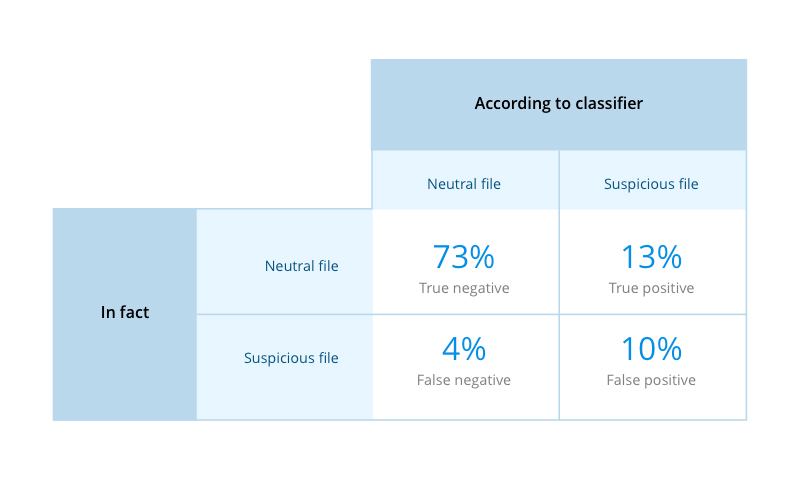

We tested our deep neural network classifier on a sample of 205 files: 177 neutral and 28 suspicious. The DNN had to process every one of them and predict which group it belonged to. See the results below.

- 170 neutral files were correctly identified as neutral

- 7 neutral files were misidentified as suspicious

- 13 suspicious files were correctly identified as suspicious

- 15 suspicious files were misidentified as neutral

To estimate the proportion of correct and incorrect predictions, we used a confusion matrix, presented as a 2×2 table for visual clarity.
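The numbers above can be arranged into that 2×2 confusion matrix and turned into standard metrics with a few lines of arithmetic. The counts come from the test results above; precision, recall, and specificity are common metric definitions that the article itself does not report, with "suspicious" treated as the positive class.

```python
# Counts from the 205-file test set; "suspicious" is the positive class.
tn = 170  # neutral files predicted neutral
fp = 7    # neutral files predicted suspicious
tp = 13   # suspicious files predicted suspicious
fn = 15   # suspicious files predicted neutral

# Rows: actual (neutral, suspicious); columns: predicted (neutral, suspicious).
confusion = [[tn, fp],
             [fn, tp]]

total = tn + fp + fn + tp
accuracy = (tn + tp) / total      # share of all files classified correctly
recall = tp / (tp + fn)           # share of suspicious files that were caught
precision = tp / (tp + fp)        # share of flagged files that were suspicious
specificity = tn / (tn + fp)      # share of neutral files left unflagged

print(f"accuracy={accuracy:.1%} recall={recall:.1%} "
      f"precision={precision:.1%} specificity={specificity:.1%}")
# accuracy=89.3% recall=46.4% precision=65.0% specificity=96.0%
```

Looking at recall and precision alongside overall accuracy shows how the errors are distributed: with only 28 suspicious files out of 205, a high accuracy figure can coexist with a large share of suspicious calls being classified as neutral.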

A little bit more

Then we trained our deep neural network classifier to recognize particular phrases in call recordings.

A call center agent has to introduce themselves and their organization within the first 20 seconds of a call. Our algorithm processed audio files and detected whether reps failed to follow this script.
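The 20-second rule can be checked with a sketch like the one below. It assumes the classifier outputs, for each second of a call, a probability that the company name is being spoken, as the per-second scoring described in this section does. `follows_greeting_script` and the 0.5 threshold are hypothetical.

```python
def follows_greeting_script(per_second_probs, deadline_s=20, threshold=0.5):
    """Return True if the company name is detected before the deadline.

    per_second_probs[i] is an assumed model probability that the phrase
    is spoken during second i of the call (seconds counted from 0).
    """
    window = per_second_probs[:deadline_s]  # only the first 20 seconds count
    return any(p >= threshold for p in window)

on_time = [0.1] * 5 + [0.9] + [0.1] * 30  # name detected in second 5
too_late = [0.1] * 25 + [0.9]             # name detected only in second 25
print(follows_greeting_script(on_time))   # True
print(follows_greeting_script(too_late))  # False
```

Calls that return False would be the ones flagged for not following the script.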

As a training sample, we used 200 recordings of the company name spoken by different people. To test the classifier, we extracted 10-second segments from 300 other audio files.
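Preparing such test clips might look like the sketch below: cut a 10-second segment, then split it into 1-second windows so the classifier can score each second separately. The article does not say where in each file the segments were taken, so `start_s` is a hypothetical parameter, and both function names are illustrative.

```python
def extract_segment(samples, sample_rate, start_s=0.0, length_s=10.0):
    """Cut a fixed-length segment (10 seconds by default) out of a recording."""
    start = int(start_s * sample_rate)
    end = start + int(length_s * sample_rate)
    return samples[start:end]

def one_second_windows(segment, sample_rate):
    """Split a segment into consecutive, non-overlapping 1-second windows."""
    return [segment[i:i + sample_rate]
            for i in range(0, len(segment) - sample_rate + 1, sample_rate)]

sr = 16000
recording = [0.0] * (sr * 15)           # a 15-second placeholder recording
clip = extract_segment(recording, sr)   # first 10 seconds
windows = one_second_windows(clip, sr)
print(len(windows))  # 10
```

Non-overlapping windows keep the example simple; overlapping windows would give finer time resolution at the cost of more classifier calls.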

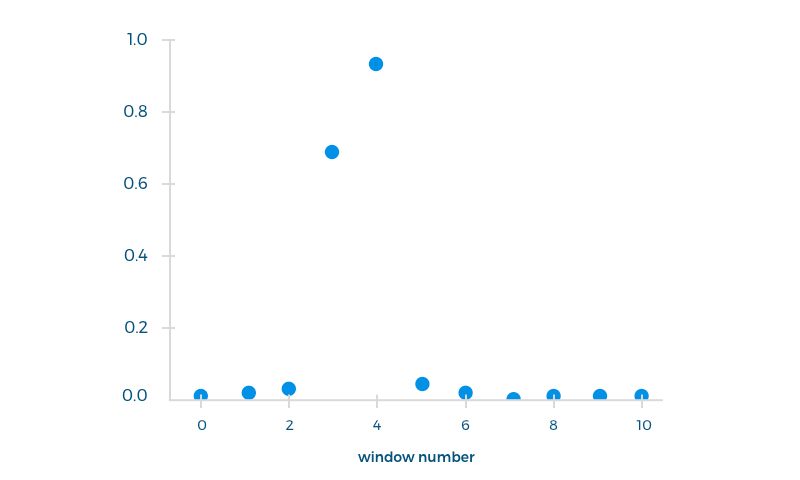

The graph above shows what happened when our DNN processed one of those 300 10-second recordings. Initially, the classifier didn't know that the company name was pronounced on second 5. The algorithm calculates the probability that the required phrase is pronounced in each second of the recording. If the second with the highest probability is the second in which the agent actually says the company name, the neural network got it right.

This time the classifier assigned the highest probability, 87%, to the 4th second, and that was right.
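The "highest probability wins" rule described above can be sketched as an argmax over per-second probabilities. The probabilities below are made-up illustrative values, not the data behind the graph, and the sketch counts seconds from 0; the article does not state which convention it uses. `locate_phrase`, `is_correct`, and `localization_accuracy` are hypothetical names.

```python
def locate_phrase(per_second_probs):
    """Return (second_index, probability) for the most likely second."""
    best = max(range(len(per_second_probs)), key=per_second_probs.__getitem__)
    return best, per_second_probs[best]

def is_correct(per_second_probs, true_second):
    """A prediction counts as correct if the peak lands on the labeled second."""
    predicted, _ = locate_phrase(per_second_probs)
    return predicted == true_second

def localization_accuracy(examples):
    """Fraction of (probs, true_second) pairs whose peak matches the label."""
    return sum(is_correct(p, t) for p, t in examples) / len(examples)

# Illustrative per-second probabilities for one 10-second clip.
probs = [0.02, 0.05, 0.03, 0.10, 0.87, 0.30, 0.04, 0.01, 0.02, 0.01]
print(locate_phrase(probs))  # (4, 0.87)
```

Running `localization_accuracy` over all labeled test clips gives a single score for the phrase detector. Note that `max` raises `ValueError` on an empty list, so clips must contain at least one window.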

In general, the larger and more varied the training sample, the more accurate the classifier becomes, although 100% accuracy is rarely achievable in practice.

Taking advantage of machine learning to improve call center performance

Automated call scoring helps define clear KPIs for call center agents, identify best practices and follow them, and increase call center productivity. However, speech recognition software can be applied to a much wider range of tasks.

Here are a few examples of how organizations can benefit from speech recognition software:

- Collect and analyze data to improve voice UX

- Analyze call recordings to find connections and trends

- Recognize people by their voice

- Detect and identify clients' emotions to raise customer satisfaction

- Dig deep into big data and increase the first call resolution rate

- Increase revenue per call

- Reduce churn rate

- and much more!

If you're all set to automate routine work, improve call center performance, and boost your business, just give us a shout!
